If you ask the owner of any translation agency what is preventing their company from growing, the answer will often be a lack of qualified translators and editors. Despite the thousands of CVs posted on various job portals, it is extremely difficult to find a suitable specialist, especially if the client has a limited translation budget.

One way of resolving this personnel conundrum is to train translators using their own mistakes. This is a fairly lengthy process, but it can produce excellent results in the long term and enable a company to grow both in the quality of its work and in size. Organizing such training, however, is no easy task, and without automation it can also be rather costly. This article discusses how to automate, as far as possible, the process of training translators and assessing their work.

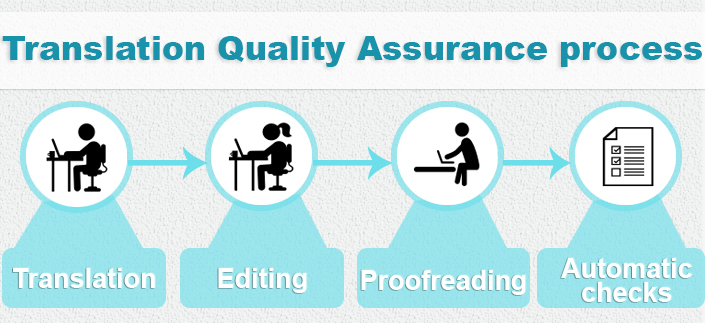

The standard quality-control process at an agency

The standard quality-control process at an agency is as follows:

Once a translator delivers a text, it is edited and proofread (sometimes by the same person) and then run through a series of automated checks with special tools (Xbench, Verifika, etc.), after which the translation is sent to the client. If the client is satisfied, everyone forgets about the project and moves on to the next one.

This process is like a conveyor belt, where every individual performs their own particular function. It makes it possible to ensure the quality of the translation, but its stages are isolated from one another. The translator delivers the “raw materials” to the editor, and that’s where their participation in the project ends. The editor then makes all the necessary corrections without contacting the translator.

In the end, we’re left with the following picture:

The translator has no idea what happens to the text after it’s submitted, and they don’t see the editor’s corrections. As a result, they can’t learn from their mistakes. Instead, they’ll continue repeating them in every job while, at the same time, boasting of their many years of experience.

The editor, in turn, will end up correcting the very same mistakes for years, becoming irritated by the monotony of such work.

By taking this approach, translation agencies spend more time and resources on editors and experience a certain talent gap, as their translators fail to develop. The company begins to stagnate and starts facing staffing problems. This sort of agency may be able to do quality work, but it will remain limited by its small team of professionals and won’t be able to grow.

Providing translators with feedback

To resolve this problem, agencies introduce a process of giving translators feedback. The simplest way to do this is to tell the translator whether their translation was good or bad and to briefly describe the kinds of mistakes they made.

However, vague comments about poor quality are often taken by translators as personal insults, and such reactions must be avoided if the dialog is to remain constructive. That’s why your comments need to be as specific as possible: for example, a complete list of corrections with each mistake labeled by type.

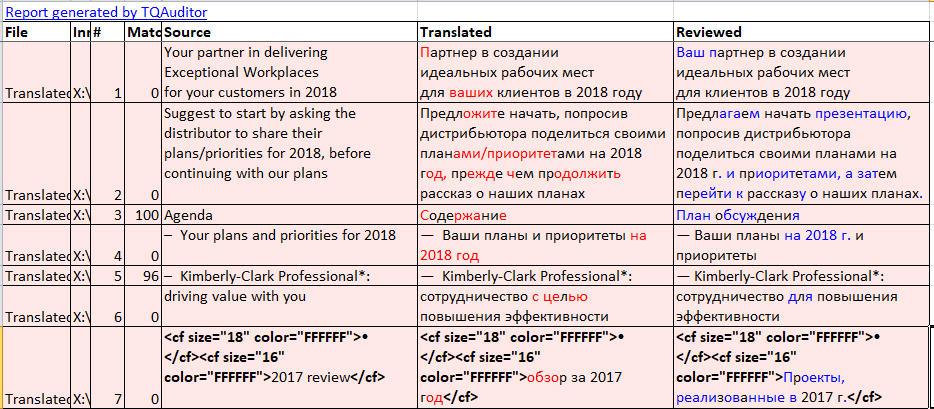

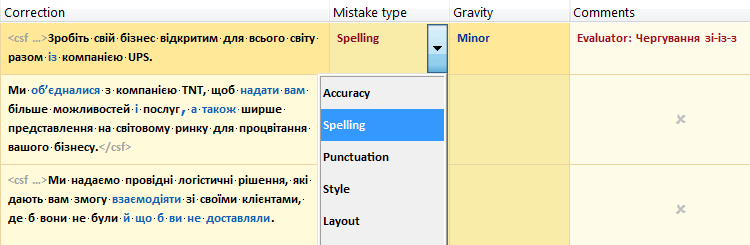

This sort of report on editorial corrections can easily be generated with the help of TQAuditor: you just need to load two versions of the file and compare them — you then receive a list of corrections that you can export into Excel:

The report can be sent to the translator as a learning tool for the future.
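The article doesn’t describe how TQAuditor compares the two versions. As a rough illustration of what a corrections report contains, here is a minimal Python sketch that diffs a translation against its edited version segment by segment with the standard-library `difflib` module and serializes the result as CSV, which Excel opens directly. It assumes both versions are already split into aligned segments; the function names and the `[-deleted-]` / `{+inserted+}` markup are hypothetical choices, not TQAuditor’s format.

```python
import csv
import difflib
import io

def corrections_report(translated, edited):
    """List every segment the editor changed, with a word-level diff.

    Hypothetical sketch: assumes both versions are lists of segments
    aligned one-to-one (segment i of the translation = segment i of the edit).
    """
    if len(translated) != len(edited):
        raise ValueError("segment lists must be aligned one-to-one")
    rows = []
    for number, (before, after) in enumerate(zip(translated, edited), start=1):
        if before == after:
            continue  # unchanged segment: nothing to report
        old, new = before.split(), after.split()
        parts = []
        matcher = difflib.SequenceMatcher(None, old, new)
        for op, i1, i2, j1, j2 in matcher.get_opcodes():
            if op == "equal":
                parts.append(" ".join(old[i1:i2]))
            if op in ("delete", "replace"):
                parts.append("[-" + " ".join(old[i1:i2]) + "-]")  # removed words
            if op in ("insert", "replace"):
                parts.append("{+" + " ".join(new[j1:j2]) + "+}")  # added words
        rows.append({"segment": number, "translation": before,
                     "edited": after, "changes": " ".join(parts)})
    return rows

def to_csv(rows):
    """Serialize the report as CSV text, which Excel can open."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["segment", "translation", "edited", "changes"])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()

rows = corrections_report(["The cat sat on mat.", "Hello world."],
                          ["The cat sat on the mat.", "Hello world."])
print(rows[0]["changes"])  # The cat sat on {+the+} mat.
```

A word-level diff is used because character-level diffs of translated text tend to produce fragments that are hard for a translator to read.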

Error classification

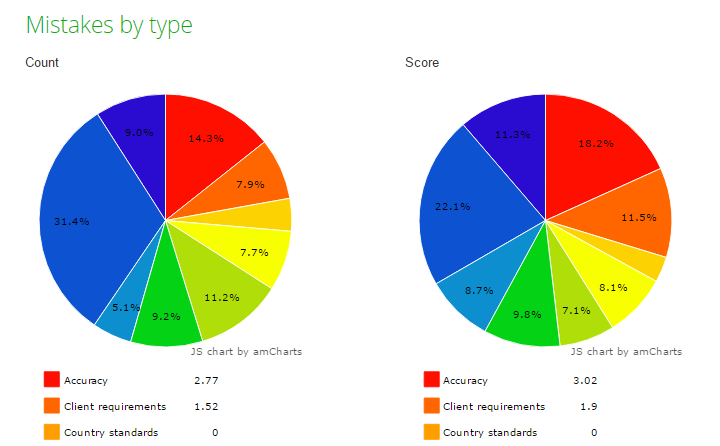

In and of itself, such a list of corrections tells us little about the quality of a translation, since it is difficult to distinguish serious errors from insignificant, preferential changes on the part of the editor. Therefore, every error can be classified by type and severity, and then you can calculate a quality score based on the number of penalty points per 1,000 words of translation.

Many agencies have developed special Excel forms for this purpose. As a result, every translator receives a file with a scored assessment of their work and can see how it was evaluated.
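The calculation behind such a score is straightforward: add up the penalty points for every classified error and normalize by the length of the text. Here is a minimal Python sketch, assuming a hypothetical three-level severity scale worth 1, 5 and 10 points (agencies and tools set their own categories and weights):

```python
from dataclasses import dataclass

# Hypothetical severity weights; every agency (and every tool) sets its own.
SEVERITY_POINTS = {"minor": 1, "major": 5, "critical": 10}

@dataclass
class Error:
    category: str  # e.g. "terminology", "grammar", "punctuation"
    severity: str  # one of the keys in SEVERITY_POINTS

def penalty_per_1000_words(errors, word_count):
    """Total penalty points normalized to 1,000 words of translation."""
    if word_count <= 0:
        raise ValueError("word_count must be positive")
    total = sum(SEVERITY_POINTS[e.severity] for e in errors)
    return total * 1000 / word_count

errors = [Error("grammar", "minor"), Error("punctuation", "minor"),
          Error("terminology", "major")]
print(penalty_per_1000_words(errors, 2500))  # 2.8
```

With this kind of score, lower is better: two minor errors and one major error in 2,500 words come to 7 points, or 2.8 points per 1,000 words. Normalizing by length is what makes scores for long and short texts comparable.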

Coordination and communication

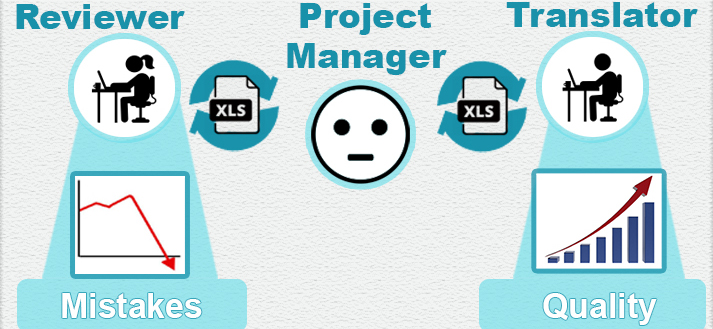

The process of assessing the quality of a translation described above looks more or less as follows:

In this case:

1. The project manager receives the assessment file from the editor and sends it to the translator.

2. The translator looks it over, adds their comments and returns the file to the project manager.

3. The project manager sends the file to the editor and asks for comments.

4. If the translator and editor are unable to agree on any particular issue, steps 1-3 may be repeated numerous times until the project manager runs out of patience.

5. The project manager receives the final quality assessment and enters it into a special file for record-keeping purposes.

This process makes it possible to train translators. It is effective: translators learn, and editors start working more quickly since the translators are making fewer mistakes. So why don’t the majority of agencies employ this process?

The project manager’s expression in the picture above should give us a hint: as a rule, project managers already have plenty of work, and now they’re stuck with yet another task, namely constantly sending files back and forth between translators and editors and then recording the results of assessments in a separate file. For the person managing translation projects, this means additional boring, monotonous work, and for the agency’s owners, it means additional costs. In the end, this process is often skipped for lack of time, and translators are left with no feedback on their work.

Automation

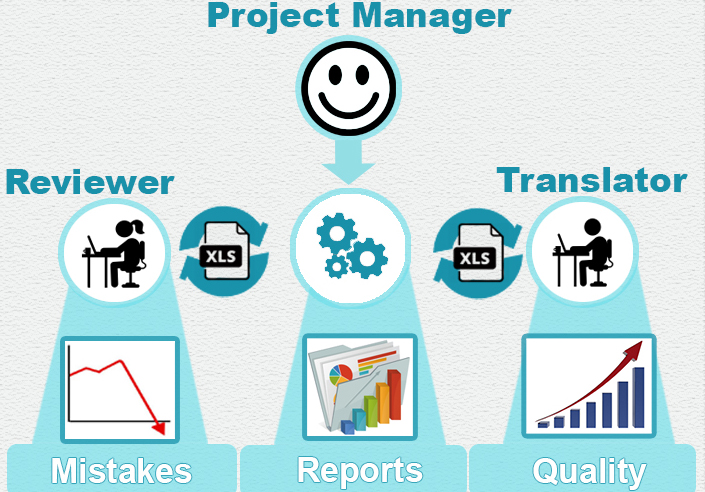

Monotonous work needs to be automated so that people can focus on creative tasks that cannot be performed by a computer. In the case of the process of evaluating the quality of translators’ work, this monotonous work involves sending and forwarding e-mails and instructions. If this task were given to a machine to do, the process would look as follows:

In this case, the project manager just needs to choose the editor and the translator and create a request for an assessment of the translation’s quality. All further actions are coordinated by the system itself: communication between the participants and the instructions sent to each of them at the relevant stages are handled automatically. As a result, the project manager spends no more than two minutes coordinating the project and receives the finished evaluation once the process is over.
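To make the idea of “the system coordinates everything” more concrete, here is a minimal sketch of such a workflow as a state machine: each stage has one responsible role, and moving to the next stage automatically notifies that role. The stages, the routing table and the `notify` callback (a stand-in for e-mail) are illustrative assumptions, not TQAuditor’s actual design.

```python
from enum import Enum, auto

# Hypothetical stages of an evaluation project (illustrative only).
class Stage(Enum):
    AWAITING_EDITOR = auto()      # editor uploads both versions and classifies errors
    AWAITING_TRANSLATOR = auto()  # translator reviews the evaluation
    COMPLETED = auto()            # project manager gets the finished result

# Who is notified when the project enters each stage (assumed routing table).
RESPONSIBLE = {
    Stage.AWAITING_EDITOR: "editor",
    Stage.AWAITING_TRANSLATOR: "translator",
    Stage.COMPLETED: "project_manager",
}

class EvaluationProject:
    def __init__(self, notify):
        # notify(role, message) stands in for sending an e-mail
        self.notify = notify
        self.stage = Stage.AWAITING_EDITOR
        self._announce()  # creating the project immediately contacts the editor

    def editor_submitted(self):
        self._advance(Stage.AWAITING_EDITOR, Stage.AWAITING_TRANSLATOR)

    def translator_accepted(self):
        self._advance(Stage.AWAITING_TRANSLATOR, Stage.COMPLETED)

    def _advance(self, expected, new):
        # Refuse out-of-order actions instead of silently skipping stages
        if self.stage is not expected:
            raise RuntimeError(f"cannot move from {self.stage.name} to {new.name}")
        self.stage = new
        self._announce()

    def _announce(self):
        role = RESPONSIBLE[self.stage]
        self.notify(role, f"Evaluation project is now at stage {self.stage.name}")

sent = []
project = EvaluationProject(lambda role, message: sent.append(role))
project.editor_submitted()
project.translator_accepted()
print(sent)  # ['editor', 'translator', 'project_manager']
```

The project manager appears only twice in this flow: when creating the project and when receiving the completion notice. Everything in between is routed by the table.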

Some agencies have built such systems in-house. This requires a serious investment, however, which is why a more rational choice for small and medium-sized agencies is to use an existing solution, such as the one discussed below.

TQAuditor

TQAuditor is a system for organizing assessments of translators’ work as efficiently as possible, including anonymous discussions of errors between translators and editors without the project manager’s involvement. In addition, the system collects all the data entered and generates detailed reports on the quality of the work of both translators and editors.

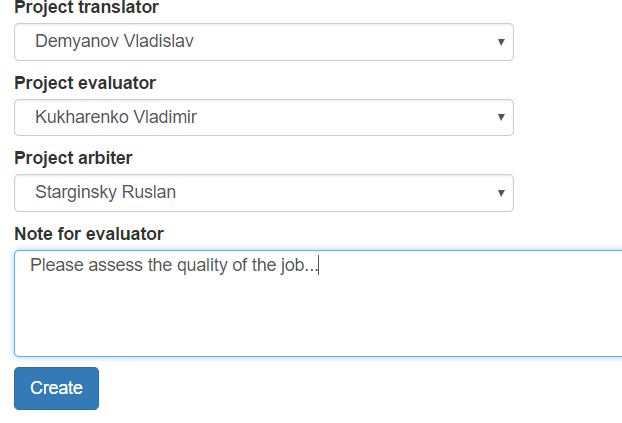

Starting an evaluation project

In order to create an evaluation project, the project manager just has to choose a translator, an editor and an arbiter and hit the Create button:

After that, the system starts working on its own, and the project manager can forget about the project.

Generating a corrections report

Immediately after a project is created, the system contacts the editor and asks them to assess the quality of a specific translation. The editor has to load two versions of the translation (before and after editing), and the system then issues a corrections report.

Once the report has been created, the system sends the translator a link to the project along with a request to review the corrections.

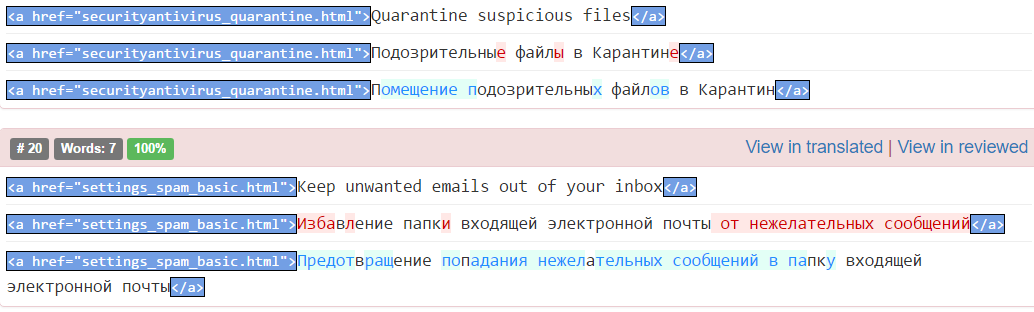

Error classification

The editor may select a fragment of the text and classify any mistakes that it contains.

Based on the number of mistakes entered, the system will use a special formula to calculate a score for the quality of the translation:

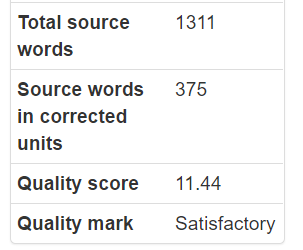

Discussion of errors between the translator and editor

When the editor completes their quality assessment, the system notifies the translator. The translator can review all the errors and, if they disagree with the classification of any particular error, write a comment for the editor and send the project back for reassessment. The editor can then either revise the classification or defend it with a reasoned rebuttal, and send the project back. In this way, they can discuss every error with each other.

The system administrator can limit the number of times the translator can challenge errors (a maximum of three, for example). Having used up all their challenges, the translator can also appeal to the arbiter, who makes final decisions to resolve disagreements.
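The challenge limit and the escalation to the arbiter amount to a small set of rules. The sketch below encodes them in Python under assumptions the article leaves open: one “challenge” means the translator sending the project back to the editor once (disputing one or more errors), the appeal becomes available only after the last challenge is used, and the arbiter’s decision is final. Class and method names are hypothetical.

```python
class ErrorDiscussion:
    """Translator/editor discussion of classified errors (hypothetical model).

    One challenge = the translator sending the project back to the editor
    once. After max_challenges, only an appeal to the arbiter remains.
    """

    def __init__(self, classifications, max_challenges=3):
        self.classifications = dict(classifications)  # error id -> severity
        self.max_challenges = max_challenges
        self.challenges_used = 0
        self.thread = []          # (role, error id, comment); roles only, no names
        self.turn = "translator"  # who currently holds the project
        self.closed = False

    def challenge(self, disputes):
        """Translator disputes errors ({error id: comment}) and returns the project."""
        self._expect("translator")
        if self.challenges_used >= self.max_challenges:
            raise RuntimeError("challenge limit reached; appeal to the arbiter")
        self.challenges_used += 1
        for error_id, comment in disputes.items():
            self.thread.append(("translator", error_id, comment))
        self.turn = "editor"

    def reply(self, error_id, comment, new_severity=None):
        """Editor revises the classification or rebuts with an argument."""
        self._expect("editor")
        if new_severity is not None:
            self.classifications[error_id] = new_severity
        self.thread.append(("editor", error_id, comment))

    def return_to_translator(self):
        self._expect("editor")
        self.turn = "translator"

    def accept(self):
        """Translator agrees with the current classification."""
        self._expect("translator")
        self.closed = True

    def appeal(self):
        """Assumed rule: possible only once every challenge is used up."""
        self._expect("translator")
        if self.challenges_used < self.max_challenges:
            raise RuntimeError("challenges remain; discuss with the editor first")
        self.turn = "arbiter"

    def arbitrate(self, final_classifications):
        """The arbiter's decision is final and closes the discussion."""
        self._expect("arbiter")
        self.classifications.update(final_classifications)
        self.closed = True

    def _expect(self, role):
        if self.closed:
            raise RuntimeError("discussion is closed")
        if self.turn != role:
            raise RuntimeError(f"it is the {self.turn}'s turn, not the {role}'s")

discussion = ErrorDiscussion({"e1": "major", "e2": "minor"}, max_challenges=1)
discussion.challenge({"e1": "This is a preferential change, not an error."})
discussion.reply("e1", "Agreed, downgraded.", new_severity="minor")
discussion.return_to_translator()
discussion.appeal()  # the only challenge is used up, so the arbiter takes over
discussion.arbitrate({"e2": "minor"})
print(discussion.classifications)  # {'e1': 'minor', 'e2': 'minor'}
```

Tracking whose turn it is prevents either side from acting out of order, and the cap on challenges keeps a disagreement from looping indefinitely.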

It is important to note that the editor and the translator don’t see each other’s names; they communicate anonymously. Only the project manager knows who they are. Anonymity helps keep the evaluation objective: the participants focus on the text instead of on the people involved. Otherwise, a personal relationship between the editor and the translator could influence the evaluation: they may be friends or, conversely, dislike each other a little, and either can be reflected in the scores.
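The anonymity rule comes down to a single check on who is looking. In this minimal sketch (the `Project` fields and role names are assumptions), the project manager sees real names and every other participant sees only role labels. The article doesn’t say what the arbiter sees, so here the arbiter gets labels too.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Project:
    # Hypothetical participant record; only the manager may see these names
    translator: str
    editor: str
    arbiter: str
    manager: str

def author_label(project, author_role, viewer_role):
    """Return how the author of a comment is shown to a given viewer."""
    if viewer_role == "manager":
        return getattr(project, author_role)  # the manager knows who is who
    return author_role.capitalize()           # everyone else sees only the role

project = Project(translator="Anna", editor="Boris", arbiter="Carla", manager="Dmitri")
print(author_label(project, "editor", "translator"))  # Editor
print(author_label(project, "editor", "manager"))     # Boris
```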

Project completion

Once the process described above is finished, the system notifies the project manager that the assessment project is complete. The manager can then log in to the system and review the errors found.

As we can see, this sort of procedure for assessing the quality of a translation doesn’t require the active involvement of the project manager: a large part of the work is performed by the system.

With this approach, a single person can coordinate up to 500 translation quality assessments in one day, work that would take at least a week without automation. For agencies, this means lower project-management costs.

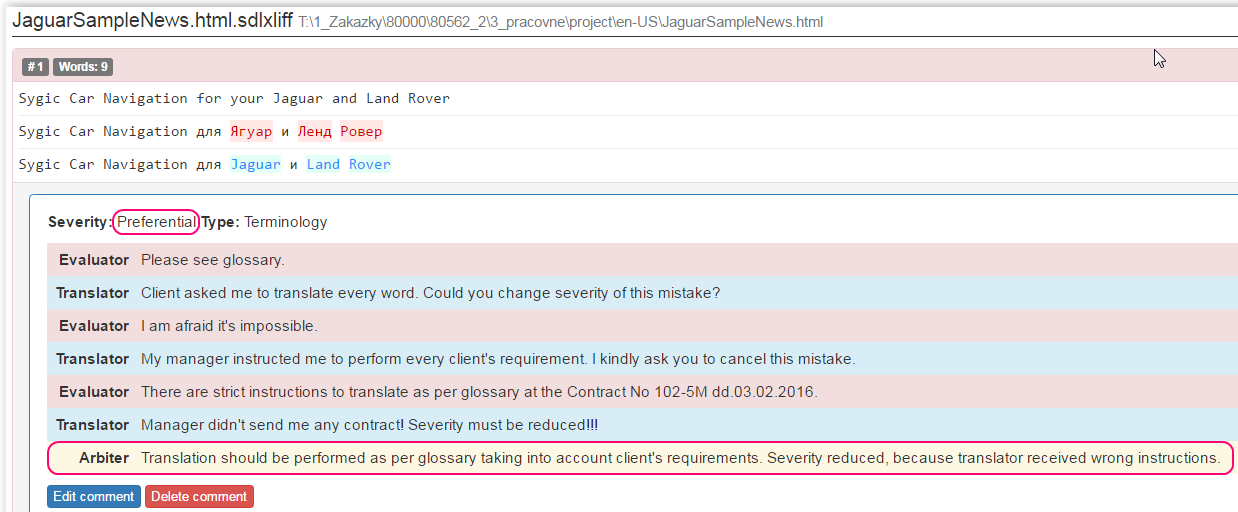

Reports on the quality of translators’ work

Quality assessment projects are saved in the system, and you can return to them at any time. In addition, on the basis of the information entered in the TQAuditor system, reports can be generated on the quality of translators’ work.

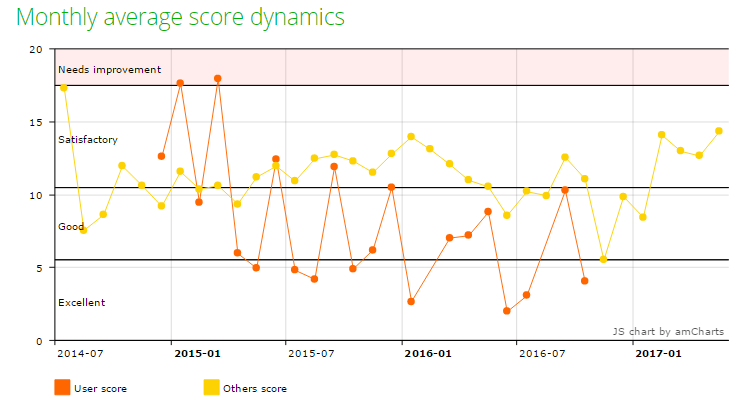

For example, you can quickly get a translator’s rating based on their average score:
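Given a list of completed evaluations, such a rating is just a grouped average sorted from best to worst. Here is a minimal sketch, assuming each evaluation is a `(translator, score)` pair. Whether a higher or lower score is better depends on the scoring scheme (penalty points per 1,000 words, for instance, are better when lower), so the direction is a parameter.

```python
from collections import defaultdict
from statistics import mean

def translator_rating(evaluations, higher_is_better=True):
    """Rank translators by average score, best first.

    evaluations: iterable of (translator, score) pairs.
    Set higher_is_better=False for penalty-style scores.
    """
    scores = defaultdict(list)
    for translator, score in evaluations:
        scores[translator].append(score)
    averages = {name: mean(values) for name, values in scores.items()}
    return sorted(averages.items(), key=lambda item: item[1], reverse=higher_is_better)

evaluations = [("A", 90), ("B", 70), ("A", 80), ("B", 96), ("C", 60)]
print([name for name, _ in translator_rating(evaluations)])  # ['A', 'B', 'C']
```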

You can also generate a detailed report about a particular translator to find out how the quality of their work has changed over time, where they stand compared with the average for all the company’s translators, what scores they have received from each editor, how the quality of their translations relates to the subject matter, and what their most common mistakes are.
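The detailed report is likewise a set of groupings over the same evaluation records. The sketch below computes a score trend, the translator’s average next to the company average, averages per editor and per subject, and the most frequent error categories. The record layout (the keys `translator`, `editor`, `subject`, `date`, `score`, `errors`) is an assumption made for illustration; the article doesn’t describe how TQAuditor stores its data.

```python
from collections import Counter, defaultdict
from statistics import mean

def _mean_by(records, key):
    """Average score per value of `key` (e.g. per editor or per subject)."""
    groups = defaultdict(list)
    for record in records:
        groups[record[key]].append(record["score"])
    return {value: mean(scores) for value, scores in groups.items()}

def translator_report(name, evaluations, top=3):
    """Summarize one translator's evaluations against the whole company.

    Each evaluation is a dict with hypothetical keys: "translator", "editor",
    "subject", "date" (ISO 8601 string), "score" and "errors" (list of
    error categories).
    """
    own = sorted((e for e in evaluations if e["translator"] == name),
                 key=lambda e: e["date"])  # ISO dates sort chronologically as strings
    if not own:
        raise ValueError(f"no evaluations for {name!r}")
    return {
        "trend": [(e["date"], e["score"]) for e in own],
        "average": mean(e["score"] for e in own),
        "company_average": mean(e["score"] for e in evaluations),
        "by_editor": _mean_by(own, "editor"),
        "by_subject": _mean_by(own, "subject"),
        "most_common_errors": Counter(c for e in own for c in e["errors"]).most_common(top),
    }
```

A report like this is what turns a list of individual scores into actionable advice. For example, a translator whose most common category is punctuation knows exactly what to work on.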

This analysis serves several purposes:

1) To provide translators with detailed feedback and recommendations that can help them develop. For example, if a translator makes a lot of mistakes with punctuation, you can recommend that they learn the rules for the placement of commas and complete some exercises. In addition, translators can also see these reports, so they know what they need to improve.

2) To make proper personnel decisions, e.g., selecting the most suitable translator for a particular subject and filtering out those whose level is insufficient.

3) To create a motivational system on the basis of the scores received by translators. For example, you can award bonuses to the translators with the highest scores.

With the help of these reports, every project manager in the company can, at any time, get information about the quality of the work of a specific translator.

Why is all this necessary?

All of the processes described above carry costs, even when automated. At a minimum, you’ll have to pay your editors for providing quality assessments. Wouldn’t it be simpler to skip this process altogether and earn more by lowering the cost per project?

The answer to this question depends on the goals you’ve set for yourself. If you need to see profit right away, then it would indeed be simpler to avoid costs related to training your translators. But if you place importance on your future prospects and building a strong team, then there’s no way to get around this. After all, your business won’t grow if the people involved in it don’t grow. By training your employees, you are preparing your team for its next qualitative leap. For example, if you get a large project, you won’t need to look for external resources or turn the offer down altogether: you’ll have a sufficient number of your own translators that you have trained yourself.

In addition, your employees will be motivated by the very fact that they’re receiving feedback. They will start to clearly understand what is required of them and what their weak points are. The possibility of professional growth is a powerful motivator capable of helping you retain valuable employees. Such feedback may indeed be the reason why many translators prefer to work with you.

Using systems like TQAuditor will enable you to organize this process as efficiently as possible while minimizing costs.
